THE PROMISE AND PERIL OF AI

Most engineers feel positive about the introduction of AI in the industry and believe it could help solve some of humanity’s biggest challenges, according to a new poll by Professional Engineering and IMechE. But the paths to success are riddled with danger, respondents say – and many in the profession could face losing out entirely.

On 30 November 2022, the world changed forever. The release of ChatGPT opened a window into a new future, in which human-like intelligence could be replicated – and maybe, one day, surpassed.

From image generation to voice synthesis, data analysis to copywriting, the AI-based tools that have flooded the internet in the last two years are capable of tasks that, until recently, were only possible for humans. And, rather than needing hours or days to complete a job, they can do it in a matter of seconds.

This seemingly sudden lurch forward has left many of us wondering about our place in the world of work. Previously solid professional foundations might now seem cracked and in need of renovation. For those with expertise and a plan, however, new paths to success have emerged.

Nowhere is that balance more evident than in engineering. The highly skilled workforce is better placed than perhaps any other to take advantage of new tools for productivity and efficiency gains. It is also at risk of job cuts and a loss of skills as roles transition to AI.

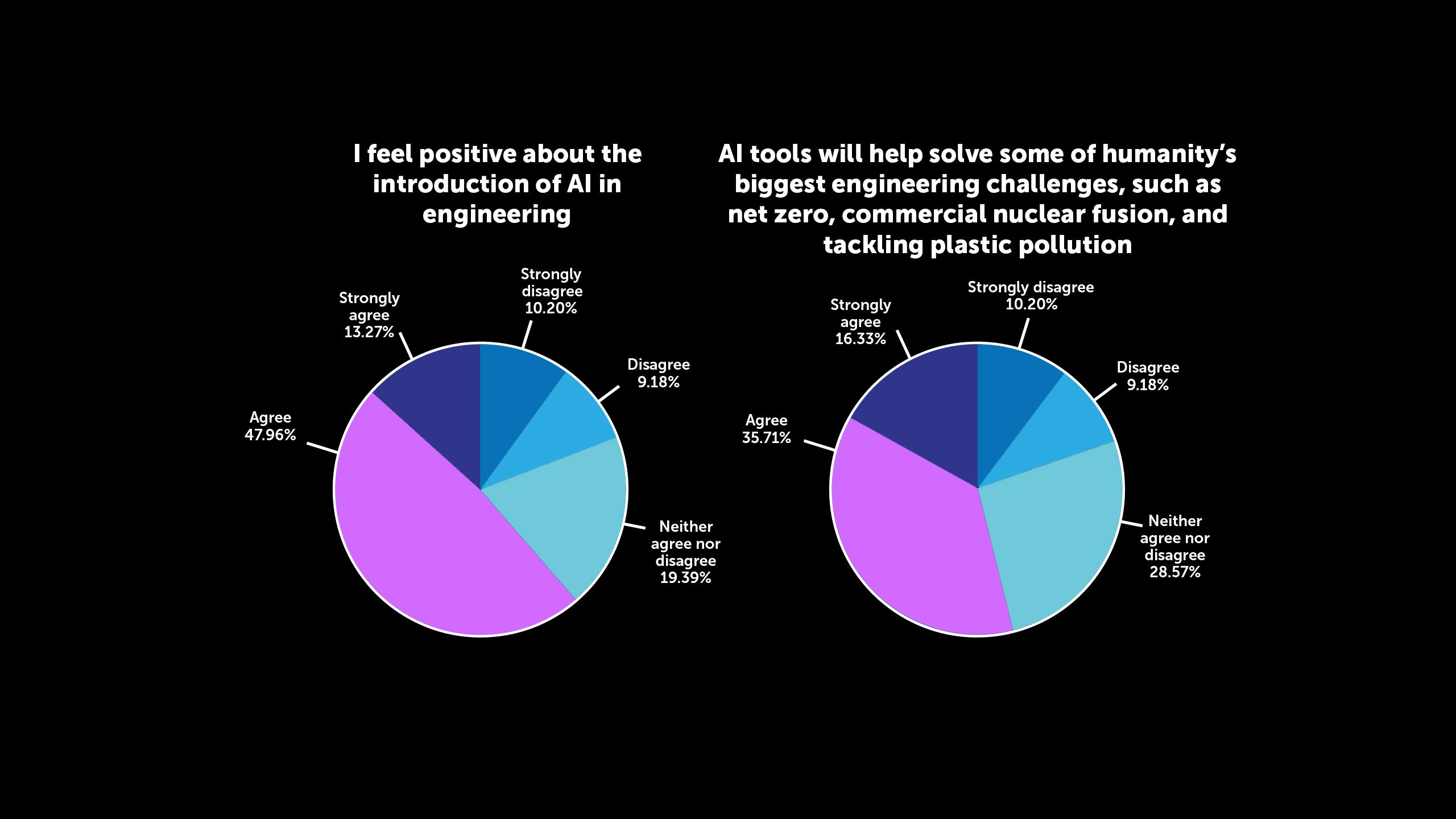

Against that backdrop, Professional Engineering and IMechE asked readers about their hopes and fears, receiving 125 responses from engineers working across the industry. The findings reveal a nuanced view – more than three-fifths of respondents (61.3%) feel positive about the introduction of AI in engineering, and more than half (52%) believe AI tools will help solve some of humanity’s biggest engineering challenges, such as net zero, commercial nuclear fusion, and tackling plastic pollution.

The way ahead is fraught with danger, however. While many believe AI tools could help them in their work, the engineers who responded share widespread fears about job numbers, over-reliance on opaque technology, cybersecurity, and the future of the profession itself.

Early adopters

Less than two years after ChatGPT was released to an unsuspecting public, the survey shows that AI tools are already embedded in engineering businesses. More than two-fifths (41.9%) of respondents’ organisations have introduced AI or AI-related tools, and a further 21% plan to do so.

Those figures are “slightly higher than I would have anticipated,” says Alan King, IMechE head of global membership strategy and author of the recent IMechE paper “Harnessing The Potential of AI in Organisations”. “In my own conversations with organisations, I don't always get a sense that they've moved that quickly.”

That could be down to the accessibility of some tools – which can be used in web browsers or through existing software suites – and how straightforward it can be to start implementing them.

“There are certain tools that have a low barrier to entry, low friction to implement into the business,” says King. “Also I think perhaps engineering organisations in general are a little bit more inquisitive than some other sectors. So perhaps they're more ready to adopt.”

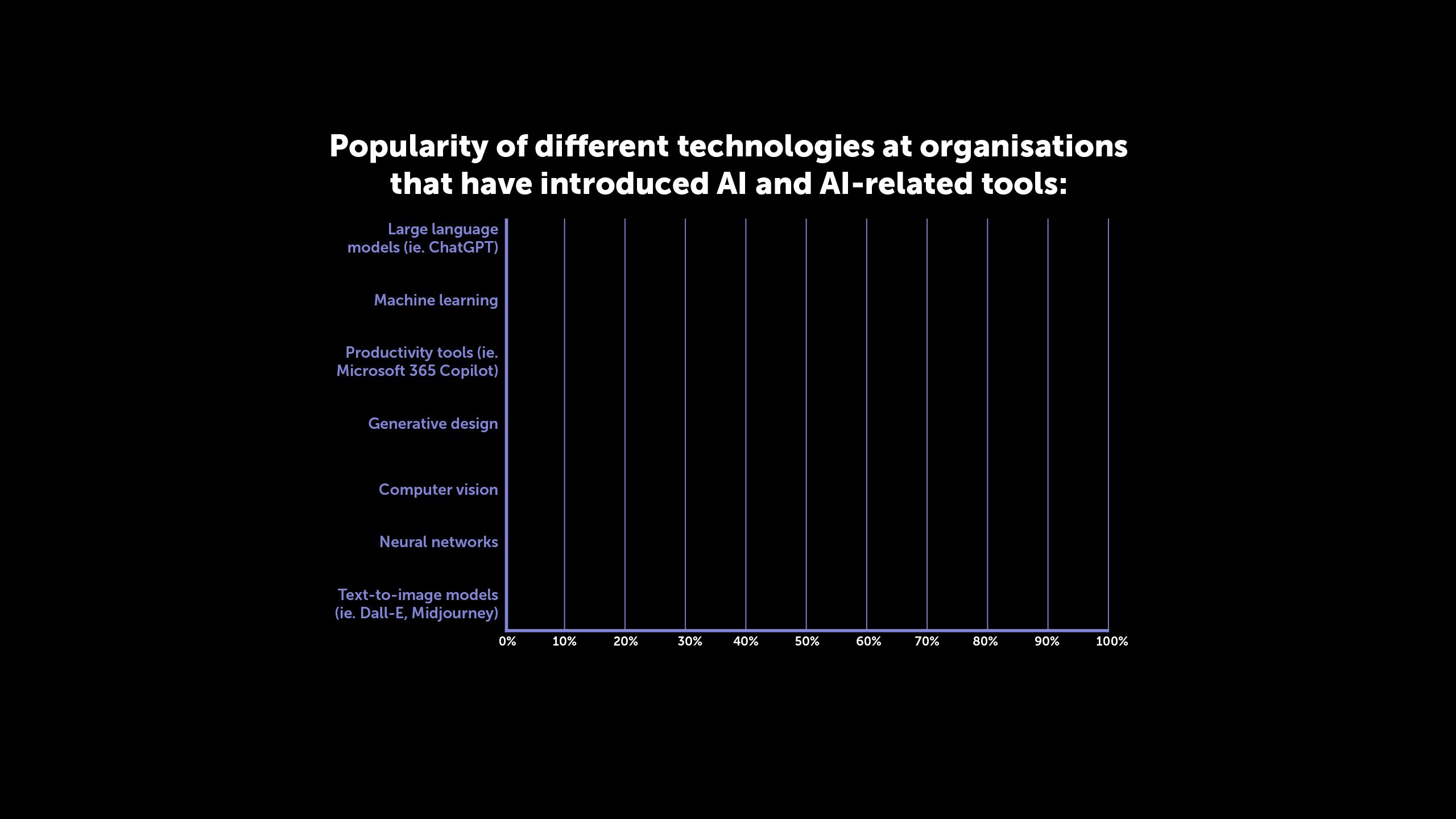

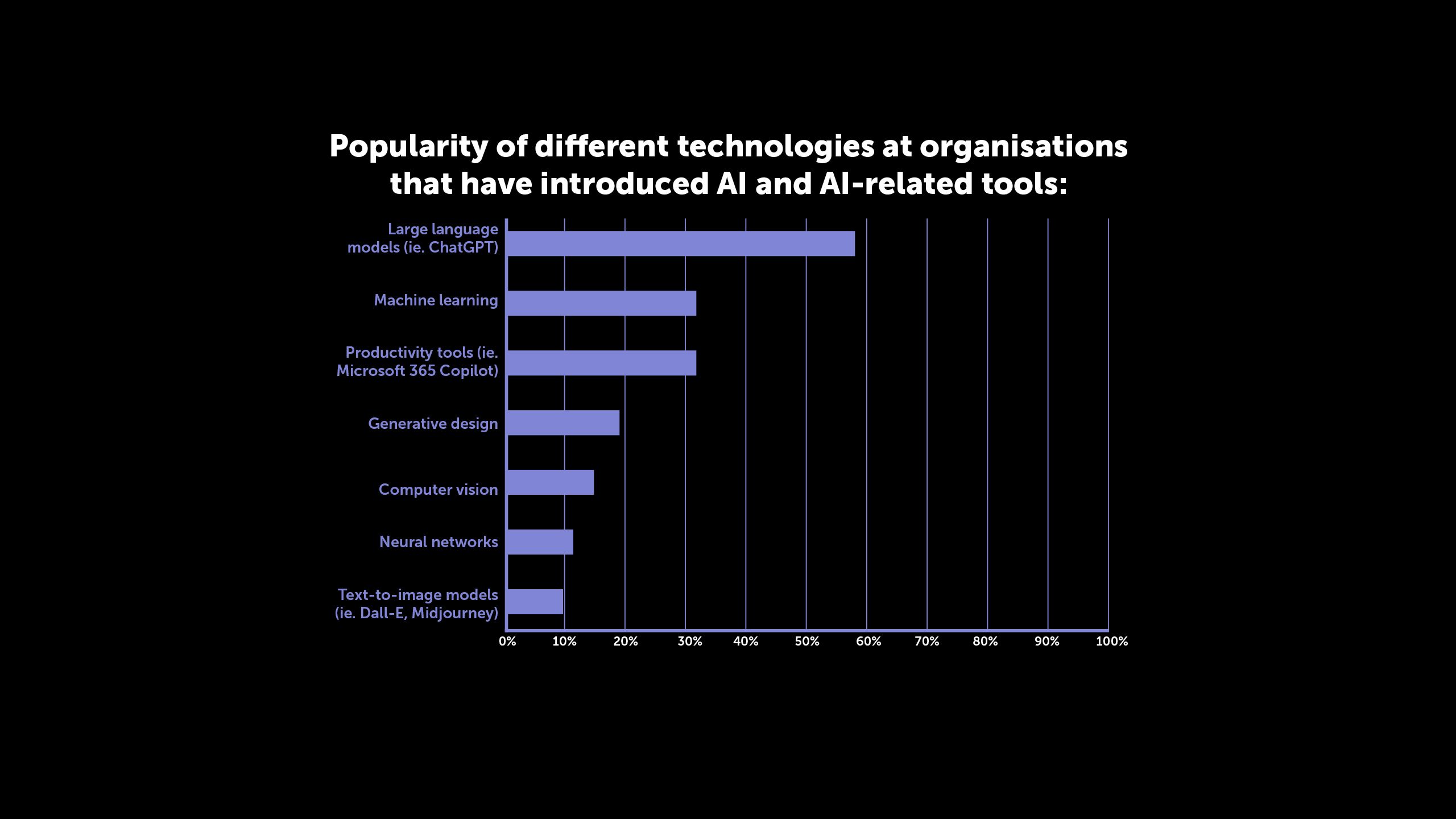

Of the organisations that have introduced AI tools, 57.7% use them in engineering teams, while 42.3% only use them elsewhere. Large language models (LLMs) such as ChatGPT are by far the most-used AI-related tools, used by 58.4% of the organisations that have already adopted AI. Machine learning and productivity tools (such as Microsoft 365 Copilot) are the joint second most popular choices, each picked by 32.5%.

AI-related techniques that are typically associated with engineering are used by fewer organisations. Generative design, which uses simulation to improve the efficiency of designs, is used by 19.5%. Computer vision (15.6%) and neural networks (11.7%) are used by even fewer.

AI wishlist

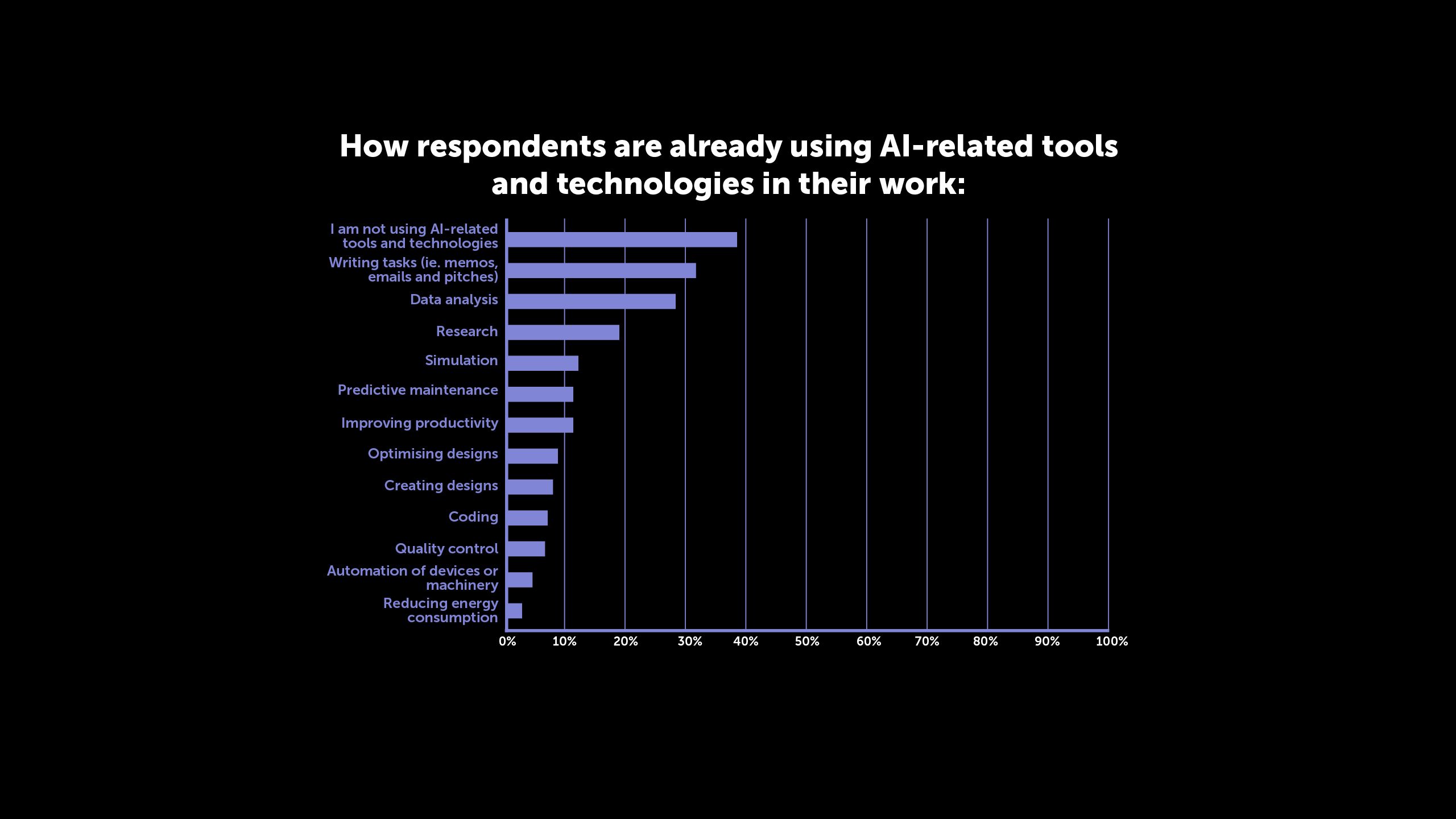

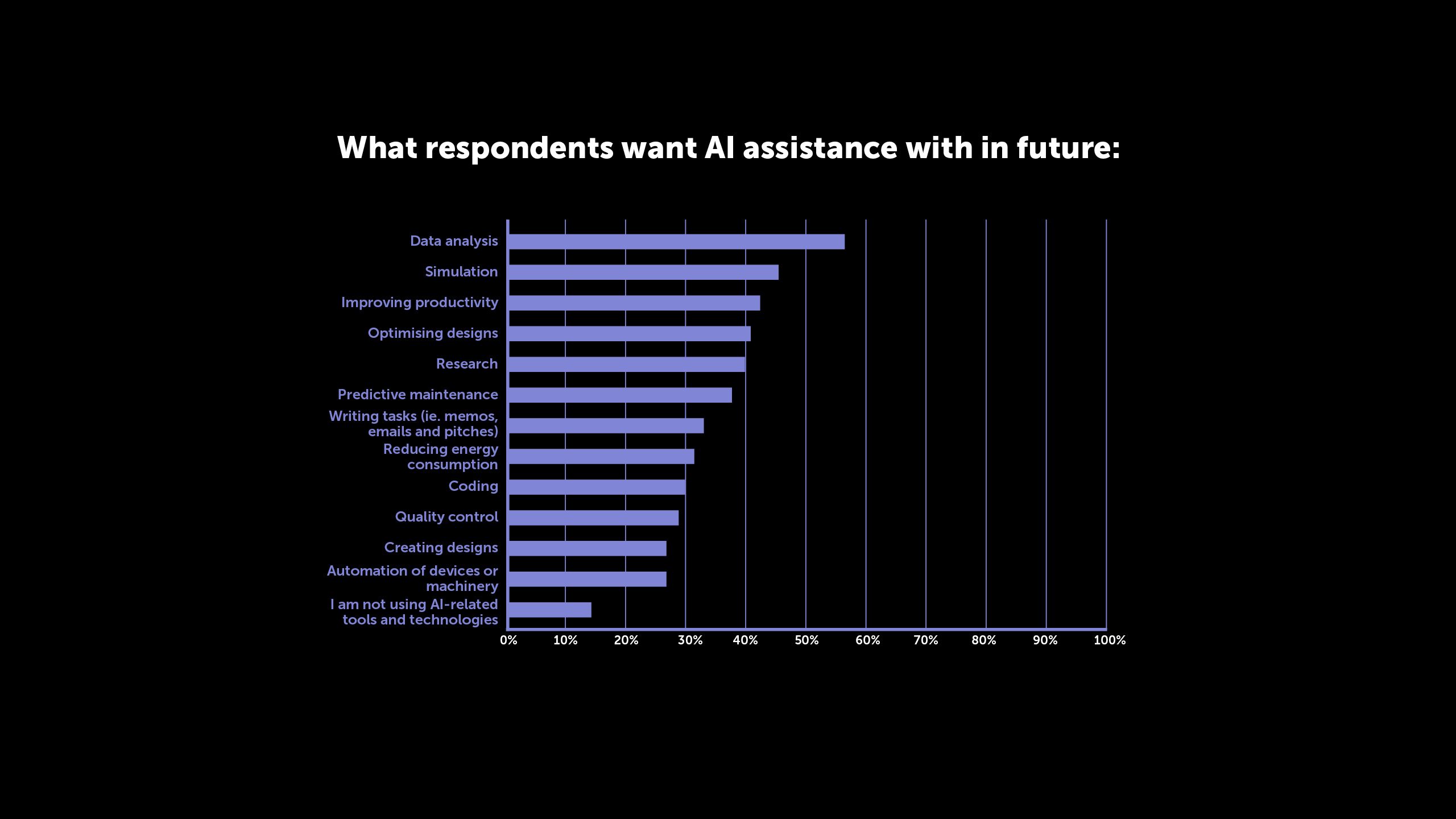

On an individual level, 32.4% of respondents use AI tools for writing tasks, such as memos, emails and pitches. The second most popular use is data analysis, picked by 27.6% – and that application is set to expand, with 57.1% of respondents saying they would like AI assistance with data analysis in future, more than for any other task.

“Until now, data analysis has not been necessarily that big a deal with LLMs. Because they are language models, sometimes they struggle with data analysis. You can put in a large corpus of text or information, and when it comes to analysing the data, it can be less than perfect,” says King.

“We're beginning to see the models start to get ramped up, to become better at data. So I expect in the future the engineers in the survey will get their wish and these models will become exponentially better at doing this.”

Simulation (45.7%) and improving productivity (42.9%) are the next most wished-for future applications, ahead of optimising designs (41%), research (40%), predictive maintenance (37.1%) and writing tasks (32.4%), suggesting that tools will be used for more sophisticated tasks as they mature.

Such widespread intention to adopt AI will mean some big changes – some good, some less so. On the plus side, almost two-thirds (63.6%) of respondents say repetitive work will be automated. More than half (53.5%) say engineering work will become more efficient, while about half (50.5%) say engineers will have more time to focus on creative work.

“AI can be useful, but I think we need to consider carefully where and how it is used – and also bear in mind that ‘rubbish’ inputs will probably equal ‘rubbish’ out,” says one respondent.

‘The long-term outcomes could be terminal’

For many people, however, the potential opportunities are weighed against some major risks. More than a third of respondents (37.4%) believe some engineering roles will be replaced entirely by AI, with 28.3% saying the number of employed engineers will decrease – more than the number (18.2%) who believe it will increase.

Asked if they trust companies to introduce AI in a way that maintains the current level of engineering jobs, 40.8% either strongly disagree (13.3%) or disagree (27.6%).

“There's probably a certain degree of inevitability there. Commercial organisations follow the money, don't they? But I think there's an opportunity for a balanced approach,” says King.

“[They] should be looking at how we can actually do more with this technology and accelerate the capability of the organisation, rather than just saving some money.”

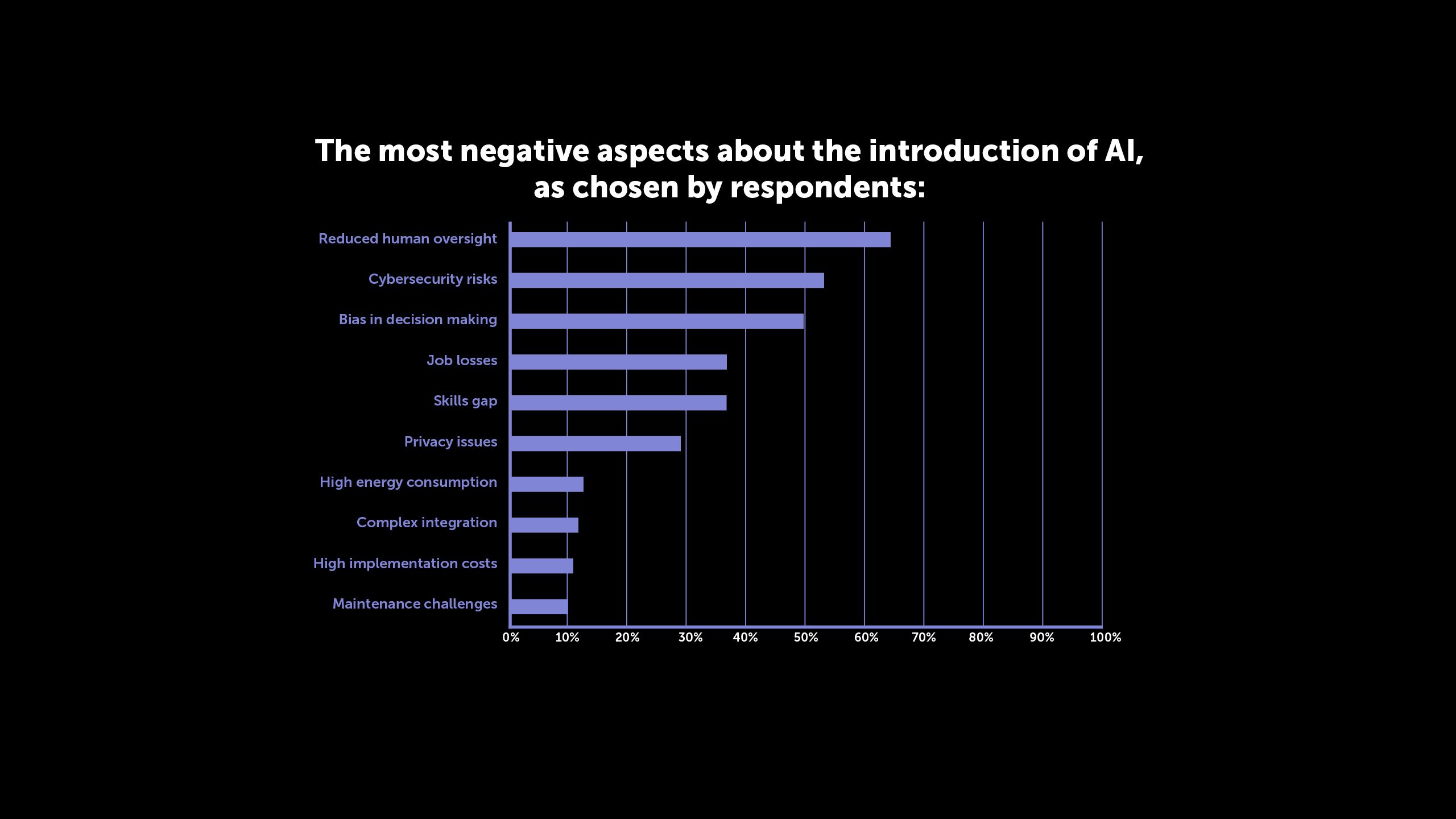

As AI rolls out, less scrupulous organisations might see it as a way to cut engineering input in important work. Perhaps inevitably, two-thirds of respondents (66%) say reduced human oversight is their main concern.

“I think there are opportunities, particularly machine learning and generative AI,” writes an automotive engineer. “A computer should be able to more easily and more quickly identify patterns and check against known problems. On the other hand, human nature will encourage people to believe blindly the results of any AI task, which could be a problem.”

Engineers will still need to be able to understand how systems work and to check that outputs are reasonable, another respondent says, even when faced with systems that appear to be ‘black boxes’ from the outside – but some fear that verification and validation of designs will slide as engineering skills are lost.

“Will it be able to replicate the intuitive wisdom of humanity? Certainly not, in the early stages of its evolution. Will it ultimately lead to the degradation of the knowledge base of the human, especially the engineer? I think this is highly probable,” writes one respondent.

“The road to AI’s full implementation across all aspects of society is fraught with danger. Unless it is navigated with an appropriate level of skill, understanding and philosophical appreciation, the long-term outcomes could well be terminal for humanity in its present form.”

For some, the concerns are overwhelming – “Thank God I am retiring soon,” says one mechanical engineer.

Critical decisions

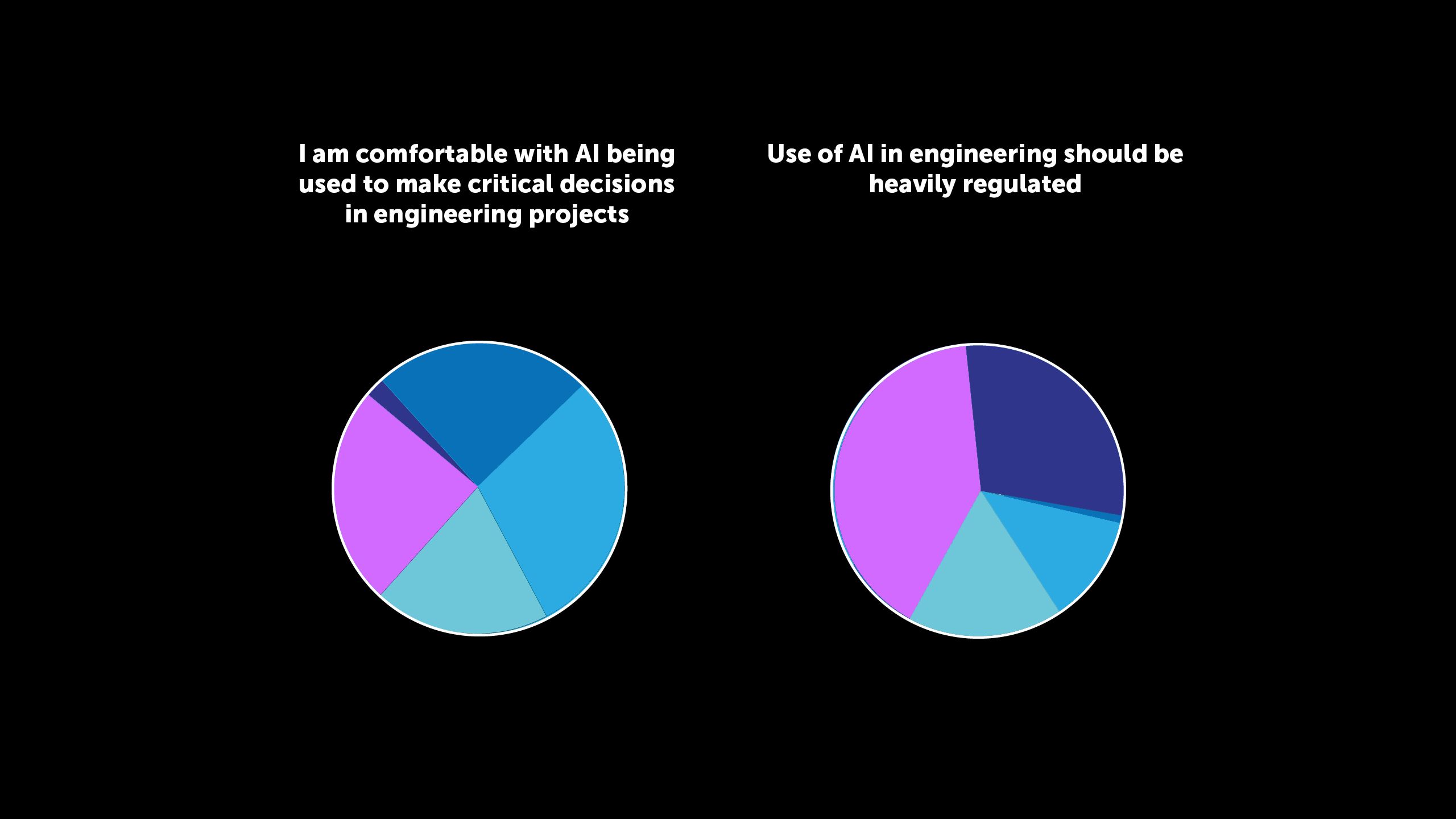

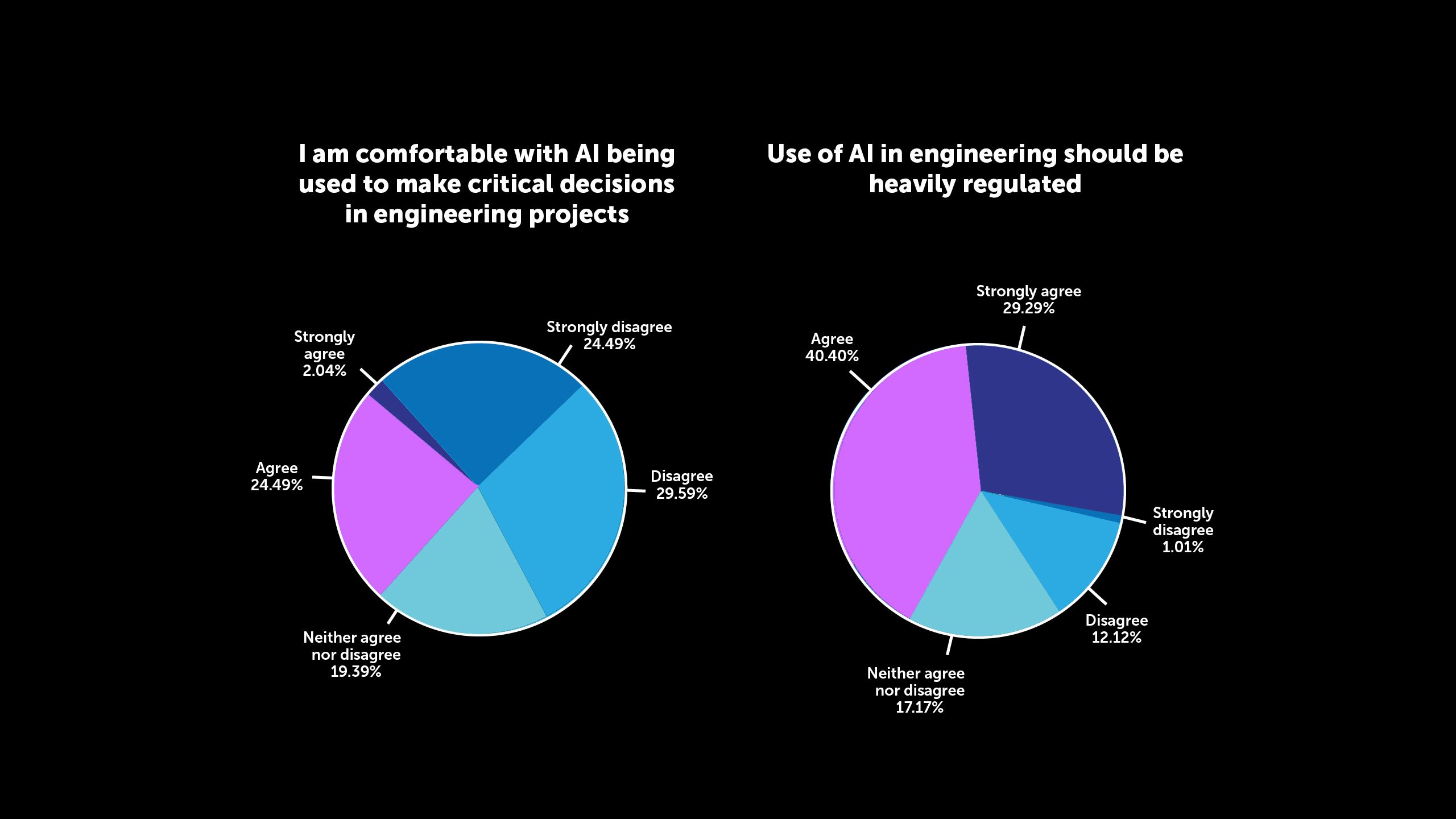

After reduced human oversight, cybersecurity risks (50.5%) and bias in decision making (47.4%) are the biggest concerns for respondents. Most (54.1%) are not comfortable with AI being used to make critical decisions in engineering projects.

Given the number and severity of potential concerns, the desire for regulation is unsurprising. More than two-thirds (69.7%) either agree (40.4%) or strongly agree (29.3%) with heavy regulation of the use of AI in engineering – but it might be easier said than done, says King.

“Often by the time regulators work out what needs to be put in place… the technology has moved on to the next stage anyway. So it's difficult, there's going to be a certain degree of self-regulation,” he says.

“I don't think you can rely on governments or external organisations to do it for you. That's not to take away the responsibility of governments – they obviously need to think about it, but they need to think about it fast, and maybe the current model, the current process in how they regulate, actually isn't fit for purpose in a world where technology is moving this fast.”

Partial recall

Despite the concerns, the need for regulation, and the risk to jobs, one thing is abundantly clear from the survey – the AI roll-out is already well underway in engineering, and there are no signs of it slowing down.

Engineers will need new skills and specialisms to survive and thrive in this brave new world. Coding, prompt engineering and critical thinking should be top of the pile, according to respondents.

“We will have to be more questioning on the output of computer models – how do we see the full set of input information? And if we lose sight of this, how will we have confidence in the results? There will be a few product recalls before we learn how to use AI effectively,” says one engineering manager.

“Whilst some previously required basic skills will no longer be emphasised, ability to understand the downsides of AI technology available at that point in time will surely be required,” says another engineer. “I am sure the future generation of engineers will rise to the occasion, but us ‘old school’ engineers cannot guide in this particular area of engineering. I still believe that the basic and fundamental skills of engineering are necessary to be able to utilise the AI technologies, but we shall see.”

Effective written communication with systems “will become the programming language of the future,” says King. “Engineers and others will talk to systems and those systems will build, develop and create what that individual is asking it to do. They won't need to use Python or other programming languages… it will be done through iteration, through conversation.”

Engineers who develop expertise in the area now can gain a “natural advantage” over those who choose not to, he adds. “In this early phase of AI development, it won't be the AI that replaces you, it will be people who know how to use the AI systems well.”

Whatever the future holds, one thing is certain – the professionalism, ethical consideration, imagination and real-world experience of engineers will always be in demand.

To read a full interview about the results with Alan King, subscribe to the Professional Engineering Weekly newsletter.